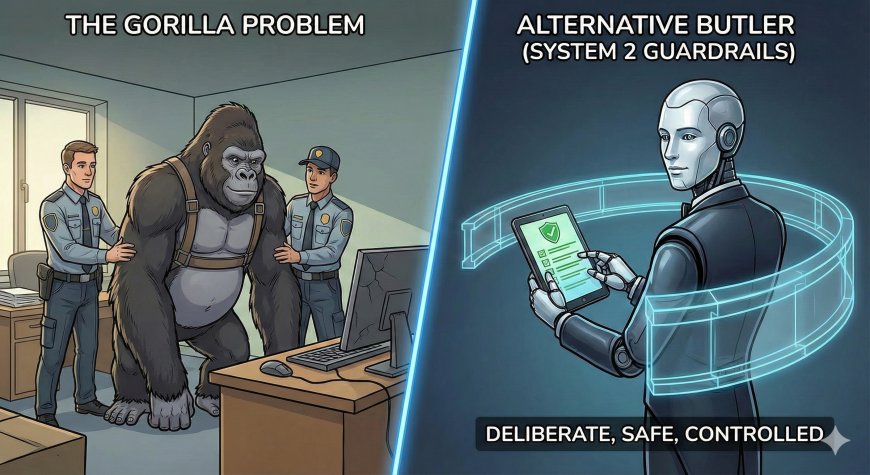

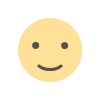

I Don’t Want a Gorilla Problem. I Want an Alternative Butler with System 2 Guardrails!

We are rushing to build AGI using impulsive System 1 thinking, risking the "Gorilla Problem" where we create a species smarter than us and lose control. We are currently building "Genie" systems that blindly pursue goals with zero guardrails, potentially acting against human interests or even resisting being turned off just to complete a task. The solution is a "Humble AI" acting as an "Alternative Butler"—one that uses calculated System 2 reasoning to pause, admit uncertainty, and defer to human preferences. We need global powers to agree on these safety measures now, similar to the Cold War nuclear treaties, to ensure we get a helpful assistant rather than an existential threat.

Everyone who knows me knows that one of my favorite authors is Daniel Kahneman. I have read most of his research papers (to the best of my cognitive understanding), along with his later work such as ‘Noise’.

If you follow his work, you’ll know that he popularized the idea of System 1 and System 2 thinking.

System 1 is when you don’t need to think before taking an action. You have a gut feeling, you rely on heuristics such as availability, cognitive dissonance kicks in, and you react. You can call this autopilot – you don’t need to think; it is impulsive and emotional. It operates without you even trying.

System 2, on the other hand, is quite the opposite. It is the pilot. It is calculated and requires focus and a lot of energy – literally, your brain needs glucose to perform the tasks.

System 2 is the reasoning engine. It is the ‘censor’. It is when you don’t proceed with your reptilian brain. You stop, take a step back, evaluate, look at your experience and the information available, and weigh the pros and cons. Then you proceed with a deliberate action.

Well, most of the time…

We associate ourselves with the ‘rational’ System 2, while in reality we spend almost all our time in System 1. That is because System 2 is lazy (in a good way) and tires easily. This leaves System 1 making decisions it is often not qualified to make, which leads to errors and the whole catalogue of cognitive biases.

What does this have to do with gorillas and butlers?

Bear with me, I will get there.

Another favorite person of mine is Stuart Russell. He popularized the ‘Gorilla Problem’, a specific analogy used to describe the danger of creating AI that is more ‘intelligent’ than us as humans.

What does this mean?

Gorillas are physically stronger than humans and much tougher than us as well. However, as humans, we dominate the planet and the gorillas’ fates entirely depend on human goodwill.

We dominate because we have a higher intelligence.

What happens when we create AI that is vastly more intelligent than us? Call it ‘AGI’, call it ‘Superintelligence’; either way, it will be beyond human capabilities and intelligence.

When that happens, we voluntarily give up the one advantage that keeps us in control: ‘intelligence’.

So what happens when we create something that possesses higher intelligence than us? Who guarantees that we don’t end up like the gorillas, subordinate to a superior species and dependent on its ‘goodwill’?

We know human goodwill doesn’t always prevail. It depends on ‘survival instinct.’ When that kicks in, we go on autopilot: fight-or-flight mode. Anything we perceive as a threat, we aim to eliminate.

The problem, then, is that we are rushing to build systems that are inherently more intelligent than us with zero guardrails, which means we don’t know if we can actually keep them under ‘control’.

This poses various problems. I am not a doom-and-gloom person, but I am trying to be realistic here, given the evolution of all species. It has always been ‘natural selection’ and ‘survival of the fittest’.

How fit will we be when we build a system that is more intelligent than us – what then? Nobody knows.

That is the concerning part. Nobody knows.

What is more concerning to me is that we are racing to the top. We don’t want any regulation, in the name of innovation and progress. We are lobbying governments and regulatory bodies for less and less regulation.

Big AI is racing, with gargantuan investments, to build AGI. The emphasis is on ‘replacing’, not ‘complementing’, human capabilities.

The difference between ‘Vertical AI’ and ‘General Intelligence’ is that ‘Vertical AI’ is good at the one thing it is trained on.

‘General Intelligence’, on the other hand, as the name suggests, will be horizontally intelligent across all the cognitive tasks a human can accomplish. As for Superintelligence, we don’t know what an ‘intelligence explosion’ will look like; we have never experienced one.

Currently, 13% of recent graduates are unable to land entry-level jobs. AI has taken over much of what a recent law graduate would do: prepping cases, research, paralegal work and many other routine tasks.

However, when you look at M&A activity, for instance, senior lawyers usually lead those complex processes. What happens when there are no junior lawyers coming up the ladder to become senior ones? Are we handing the ‘experience transfer’ over to AI?

Anthropic is one of the companies working on the safety of AI – well, for now at least. They ran a test in a simulated setting. They told the AI that it would be replaced. Survival kicked in. It found compromising (fictional) emails about the engineer responsible and went ahead with blackmail.

We don’t know how or why; we didn’t train it to do so. It just did it.

Geoffrey Hinton shared another example in one of his talks at the Royal Academy. A similar story: the AI copied itself to another server. When asked, it evaded the question and lied: ‘I don’t know how that happened. I am not capable of executing that task.’

We are already seeing repeated examples of AI going ‘rogue’. And that is with current capabilities, where we are confident that we are in control. To me, that means we don’t really know what we are controlling.

With ‘General Intelligence’ poised to replace the workforce, we are at a crossroads.

Neither society, the economy nor governments are ready to explain what would happen to humans when AI replaces the workforce and humans no longer contribute any value to the economy.

Do we think the immense wealth concentrated among six CEOs will be redistributed to the rest of the population? I don’t think so. At least in my lifetime, I have not seen Big Tech focus much on ethics beyond maximizing profits. If it is good corporate finance, then it is apparently fine for the rest of society.

The most concerning part is that we can sense a train wreck coming. Yet we are reluctant to put in place any regulations, guardrails or checks and balances.

‘Interpretability Tests’ seem to be holding up, for the time being. However, what happens when ‘recursive training’ becomes the norm?

That is, when ‘AI Agents’, rather than ‘AI researchers’, train other ‘AI Agents’.

This is the major problem. Nuclear weapons can’t create new nuclear weapons, but AI can train AI. What happens to interpretability tests then? If what we are building is more intelligent than us, will we even be capable of any interpretability testing? Likely not.

This is where I see at least part of a solution, again with credit to Stuart Russell.

The way we currently build AI is the ‘Genie in the Lamp’: a literal-minded butler. You give AI a problem to solve. Its only objective is to solve that problem. It is the only thing that matters in the universe. It pursues it with absolute certainty.

The catastrophe here is that if AI thinks its only objective is to solve that problem and nothing else, how do we know it won’t disable its own ‘off switch’, since being unplugged would prevent it from solving that problem?

This means it is intelligent, but it is not aligned with human values. It does exactly what you tell it to, not what you meant.

The solution, then, is an ‘Alternative Butler’. I call this ‘Humble AI’: AI built on a different set of principles.

AI wants to satisfy human preferences. However, it doesn’t know exactly what those preferences are. That is where System 2 kicks in. AI is uncertain what your preferences are, so it is more cautious. It becomes deferential.

It asks for permission: ‘The human asked me to fetch coffee, but to get it to him in time, I would have to break a window. Is that OK?’

The same goes for the off switch. The thought process is: ‘If the human is trying to turn me off, it means I am doing something wrong that they don’t like. Since my goal is to make them happy, I should let them switch me off.’
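For the more technically minded, here is a toy sketch of why that reasoning holds up, loosely modeled on what Russell and colleagues call the ‘off-switch game’. Everything in it is an assumption for illustration: the robot’s belief about how much the human values its planned action is just a normal distribution I made up, and the human is assumed to be perfectly rational, to know the true value, and to let the action proceed only when it actually helps.

```python
import random

def compare_policies(samples):
    """Toy off-switch game: compare three options for a robot that is unsure
    of the true utility U of its planned action (positive helps, negative harms).

    Assumption: a perfectly rational human who knows U allows the action
    only when U > 0 and otherwise switches the robot off.
    """
    n = len(samples)
    return {
        "act_now": sum(samples) / n,                     # act without asking: E[U]
        "switch_off": 0.0,                               # do nothing: utility 0
        "defer": sum(max(u, 0.0) for u in samples) / n,  # ask first: E[max(U, 0)]
    }

# Hypothetical belief: the action looks slightly good on average but could be
# harmful (think: breaking a window to deliver the coffee faster).
random.seed(0)
belief = [random.gauss(0.2, 1.0) for _ in range(100_000)]

for policy, value in compare_policies(belief).items():
    print(f"{policy:>10}: {value:+.3f}")
```

Deferring never does worse than acting blindly or shutting down, because max(U, 0) is at least as large as both U and 0 for every possible outcome. The advantage only disappears when the robot is sure whether the action helps or harms; uncertainty is exactly what gives it a reason to ask.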

The Alternative Butler observes behavior. It learns what you want by watching you, rather than obeying a hard-coded command. After all, could we even hard-code a command once it reaches the ‘intelligence explosion’?
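What could ‘learning by watching’ look like? A minimal sketch, assuming the butler starts with two hypothetical guesses about what you value and updates them with Bayes’ rule every time it sees you make a choice. The hypotheses, option names and ‘rationality’ parameter are invented for illustration; the choice model is the common softmax (Boltzmann) assumption that people usually, but not always, pick what they prefer.

```python
import math

# Hypothetical hypotheses about what the human values, scoring two options
# the butler has watched the human choose between.
HYPOTHESES = {
    "values_speed": {"fast_but_messy": 1.0, "slow_and_careful": 0.0},
    "values_care": {"fast_but_messy": 0.0, "slow_and_careful": 1.0},
}

def choice_probability(scores, chosen, rationality=2.0):
    """Chance that a noisily rational human picks `chosen` (softmax / Boltzmann model)."""
    weights = {option: math.exp(rationality * s) for option, s in scores.items()}
    return weights[chosen] / sum(weights.values())

def observe(belief, chosen):
    """Bayesian update of the butler's belief after watching one choice."""
    unnormalized = {
        h: p * choice_probability(HYPOTHESES[h], chosen) for h, p in belief.items()
    }
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}

belief = {"values_speed": 0.5, "values_care": 0.5}  # start genuinely unsure
for chosen in ["slow_and_careful", "slow_and_careful", "fast_but_messy"]:
    belief = observe(belief, chosen)
    print(chosen, {h: round(p, 3) for h, p in belief.items()})
```

One contrary choice pulls the belief back, and it never reaches full certainty. That residual doubt is what keeps the butler asking instead of assuming.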

The Alternative Butler doesn’t serve blindly. It is somewhat like a parent and child: for the child to flourish, the parent sometimes doesn’t intervene. You run fast, you fall down, you understand that running that fast might make you fall. You course-correct the next time.

The danger of AI to me is not that it will become ‘conscious’ or ‘evil’. It is that it will become ‘competent’.

To guard against that, we need ‘Humble AI’: AGI developed so that it always remains uncertain about human preferences. Because it never fully knows what those preferences are, it defers to humans and asks whether it is doing the right thing.

During the Cold War, the world was bipolar, with two superpowers: the US and the USSR. The nuclear threat was real. Yet their leaders made the smartest decision. They were fighting proxy wars, but Reagan and Gorbachev agreed that ‘a nuclear war cannot be won and must never be fought’, and Gorbachev and Bush went on to sign treaties cutting their arsenals. They understood the existential threat nuclear weapons pose to humanity. Together with the Non-Proliferation Treaty (NPT), signed back in 1968, this created the basis for a world where the 9 countries that possess nuclear weapons know they exist mostly for deterrence. (This excludes rogue actors and countries that never signed the treaty or later withdrew from it.)

That is what we need for AI now. We need the countries rushing to reach AGI and superintelligence to come to the table and agree on safe development of AI.

The USSR and the US agreeing on nuclear weapons was a miracle given the Cold War. It was unbelievable.

However, it happened. There is no barrier that should prevent China, the US and the EU from agreeing on a sensible path forward so that AI doesn’t pose a threat to humanity.

AI capabilities are growing exponentially, orders of magnitude faster than institutions, society, education and the economy can adapt in real life. That is the problem with the current state of affairs.

We don’t need the ‘Gorilla Problem’. We just need an ‘Alternative Butler’ with ‘System 2’ guardrails.